The rise of artificial intelligence (AI) has benefited computer experts and technology-challenged individuals alike. However, the growing number of AI tools also calls for caution. While AI is helpful, many AI tools keep learning from the data users give them. Users must be careful about what information they feed their AI tools, because sharing the wrong information can create cybersecurity risks.

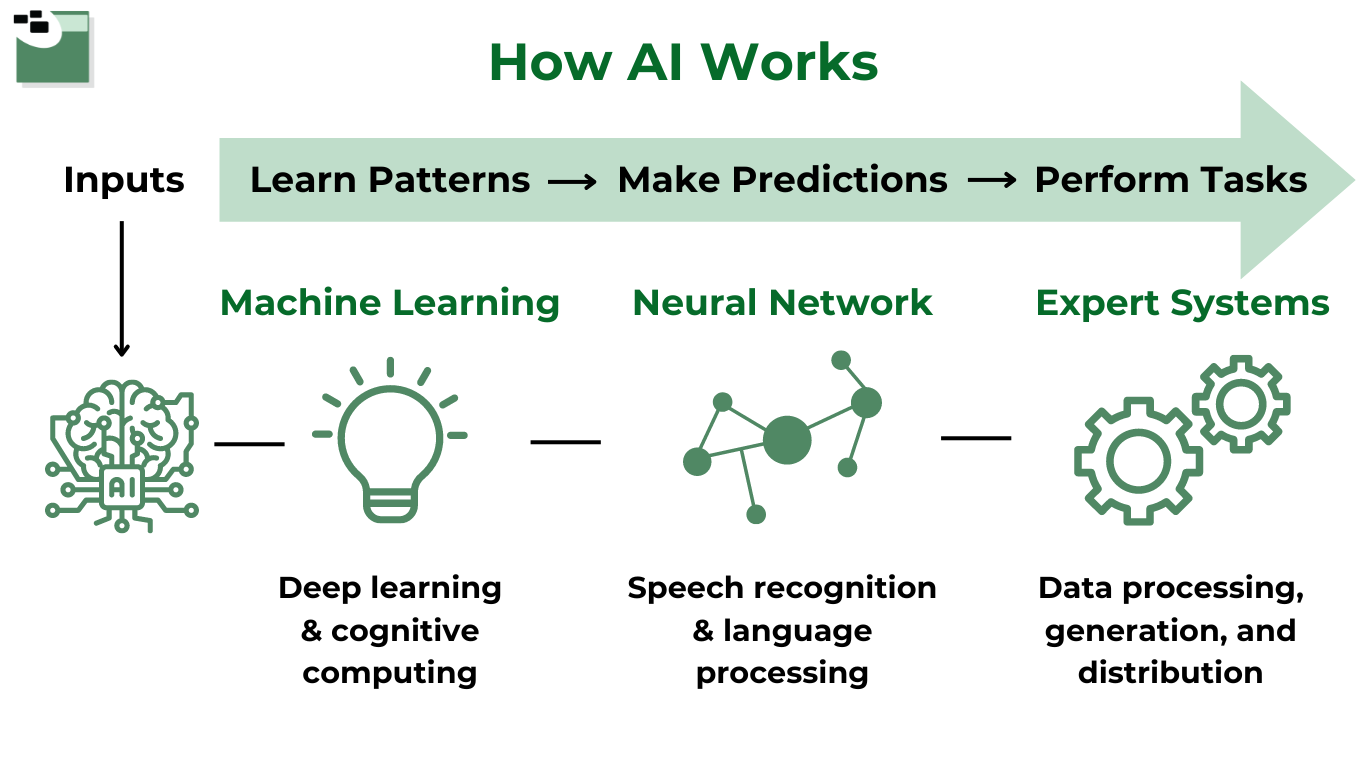

How AI Works

The dataset behind an AI tool is vital, because the AI learns from whatever information it receives. The tool uses this input to learn patterns, a process called machine learning, and then applies those patterns to make predictions and perform tasks. Before inputting data, users should preprocess it, cleaning it and transforming it into a format suitable for training AI models. Incorrectly processed data, such as records with missing values or inconsistent formats, can cause the model to learn the wrong patterns.
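As a rough illustration of preprocessing, the sketch below cleans a few hypothetical login-event records: it drops records with missing fields, normalizes text fields, and min-max scales a numeric feature into the range 0 to 1. The field names (`user`, `country`, `failed_logins`) and the sample values are invented for this example; real pipelines usually rely on libraries such as pandas or scikit-learn.

```python
def preprocess(records: list[dict]) -> list[dict]:
    """Clean raw records and scale `failed_logins` into the range [0, 1].

    Hypothetical schema: each record has `user`, `country`, `failed_logins`.
    """
    required = ("user", "country", "failed_logins")
    # Drop records with missing or empty fields instead of guessing values.
    complete = [r for r in records if all(r.get(k) not in (None, "") for k in required)]
    if not complete:
        return []

    counts = [int(r["failed_logins"]) for r in complete]
    low, high = min(counts), max(counts)
    span = high - low

    cleaned = []
    for r, count in zip(complete, counts):
        cleaned.append({
            # Normalize text so " Alice " and "alice" are treated as the same user.
            "user": str(r["user"]).strip().lower(),
            "country": str(r["country"]).strip().upper(),
            # Min-max scaling; if all values are equal, map them to 0.0.
            "failed_logins": (count - low) / span if span else 0.0,
        })
    return cleaned


raw = [
    {"user": " Alice ", "country": "us ", "failed_logins": "0"},
    {"user": "bob", "country": "DE", "failed_logins": "10"},
    {"user": "carol", "country": None, "failed_logins": "3"},  # incomplete: dropped
    {"user": "dave", "country": "fr", "failed_logins": 5},
]

for row in preprocess(raw):
    print(row)
```

The incomplete record is dropped rather than filled in, which keeps the example simple; whether to drop or impute missing values is a judgment call that depends on the dataset.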

Common Pitfalls with Cybersecurity and AI

AI’s learning process is efficient, but some issues can occur. To prevent cybersecurity risks, avoid the following pitfalls when using AI:

- Sharing Unlimited Data: Be mindful of the data you share. Users should never share obvious personal details online, such as addresses, phone numbers, passwords, and Social Security numbers. Less obvious data that should also stay private includes IP addresses, database names, code function names, customer names, configuration files, and license keys.

- Over-Reliance on AI: Assuming AI cannot make critical mistakes or can handle every threat leads to complacency.

- Biased or Insufficient Data: AI models need large amounts of representative, unbiased training data; small or biased datasets lead to poor performance, such as missed threats or false alarms.

- Overfitting: A model trained too closely on historical data may fail to recognize new threats, leaving the system vulnerable.

- Data Poisoning: Attackers can inject manipulated, or "poisoned," data into the training set to degrade the AI’s performance.

By staying alert to these pitfalls, organizations can implement AI-enhanced cybersecurity measures properly.
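One way to act on the first pitfall is to scrub obvious identifiers from text before pasting it into an AI tool. The sketch below uses a few regular expressions to mask email addresses, U.S.-style Social Security numbers, IPv4 addresses, and phone numbers. The patterns and placeholder labels are illustrative assumptions, not a complete data loss prevention solution: they will miss many formats and can produce false positives. Less obvious data such as database names or license keys depends on your environment and is not covered here.

```python
import re

# Illustrative patterns only; real-world data needs broader, tested rules.
PATTERNS = [
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("IP", re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")),
    ("PHONE", re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")),
]


def redact(text: str) -> str:
    """Replace each match with a placeholder such as [EMAIL]."""
    for label, pattern in PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text


prompt = (
    "Why does jane.doe@example.com get timeouts from 10.0.0.12? "
    "Her SSN is 123-45-6789 and her phone is 555-867-5309."
)
print(redact(prompt))
# Why does [EMAIL] get timeouts from [IP]? Her SSN is [SSN] and her phone is [PHONE].
```

Replacing values with labeled placeholders, rather than deleting them, keeps the question understandable to the AI tool while the sensitive details stay local.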

Use Case

To see the damage these pitfalls can cause, consider a recent Microsoft incident that shows how improper AI data handling can impact a business. Microsoft’s AI research team accidentally exposed a large amount of private cloud-hosted data when a team member published a misconfigured link on GitHub for downloading open-source AI models.

GitHub users downloaded AI models from this cloud storage URL, but the misconfigured link granted full control permissions over the entire storage account rather than read-only access to the models. As a result, anyone with the link could view, edit, and delete existing files. The exposed data also included personal computer backups from Microsoft employees that contained private information such as passwords and internal Teams messages. Open data sharing is important for AI research, but sharing more data, with broader permissions, than intended leaves companies exposed to cybersecurity threats. Fortunately, the cloud security firm Wiz discovered the exposure and reported it to Microsoft, which quickly revoked the link. According to Microsoft, no customer data was exposed and no other internal services were put at risk.

Best Practices for Ensuring Cybersecurity with AI

Now that we have covered common pitfalls and a real-world example of improper AI usage, let’s explore best practices for ensuring cybersecurity with AI.

- High-Quality Data: Using high-quality, diverse training data prepares the AI model for a wider range of threats.

- Hybrid Approach: Combining AI with human expertise improves accuracy and reliability, because cybersecurity experts can verify the AI’s findings.

- Generalization: Using cross-validation or regularization helps models generalize to new data instead of overfitting to historical data.

- Integration: Designing AI solutions to scale efficiently and work with existing cybersecurity infrastructure.

- Compliance: Incorporating privacy principles into AI design helps ensure the systems meet regulatory requirements.

- Optimization: Continuously optimizing models helps speed up threat detection.

- Model Security: Encryption and access controls protect AI models from theft or tampering.

- Testing and Benchmarking: Regularly testing AI models against new threats and establishing solid benchmarks to measure performance ensures models stay effective.
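The Generalization practice mentions cross-validation. As a minimal sketch of the idea, k-fold cross-validation splits the data into k folds, trains on k - 1 of them, and evaluates on the held-out fold, rotating so that every record is used for validation exactly once. The code below only generates the train/validation index splits with the standard library; the training and scoring steps are left as a hypothetical comment, and libraries such as scikit-learn offer ready-made versions (for example, `KFold`).

```python
import random


def k_fold_splits(n_samples: int, k: int, seed: int = 0):
    """Yield (train_indices, validation_indices) for k-fold cross-validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    # Shuffle once so folds are not biased by the original record order.
    random.Random(seed).shuffle(indices)

    # Spread any remainder over the first folds so fold sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size


for fold, (train_idx, val_idx) in enumerate(k_fold_splits(10, k=3)):
    # Hypothetical next step: train a model on the train rows, then score it
    # on the validation rows and average the scores across folds.
    print(f"fold {fold}: {len(train_idx)} train / {len(val_idx)} validation")
```

A model that scores well on its training folds but poorly on the held-out folds is a sign of the overfitting pitfall described earlier.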

Together, these practices strengthen the security of AI models and reduce risks to the systems that rely on them.
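For the Model Security practice, one simple tamper check is to record a SHA-256 hash of a model file when it is published and verify it before loading. The sketch below uses Python’s `hashlib` and `hmac.compare_digest`; the file name `model.bin` and the idea of recording the hash at publish time are assumptions for illustration. A hash only detects changes: it does not replace encryption or access controls, and the recorded hash must itself be stored somewhere attackers cannot modify.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in chunks so large model files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model(path: Path, expected_hex: str) -> bool:
    """Return True only if the file's hash matches the recorded hash."""
    return hmac.compare_digest(sha256_of(path), expected_hex.lower())


with tempfile.TemporaryDirectory() as tmp:
    model = Path(tmp) / "model.bin"  # hypothetical model file
    model.write_bytes(b"trained weights v1")
    recorded = sha256_of(model)  # recorded at publish time

    print(verify_model(model, recorded))  # True
    model.write_bytes(b"tampered weights")
    print(verify_model(model, recorded))  # False
```

Reading in chunks keeps memory use flat for multi-gigabyte models, and `compare_digest` avoids leaking timing information during the comparison.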

Implementing AI for Cybersecurity

Cybersecurity is a key component of nearly every technological discipline. When properly implemented, AI can strengthen the security measures already in place, and when combined with human expertise, it helps detect and mitigate threats. Properly trained AI models can improve the security, compliance, and adaptability of your data. If you would like to learn more about how AI can help your business’s cybersecurity implementation, contact one of our experts today.