Welcome to the Innovation Foundation. In this four-part series, our SPK experts detail the interconnectedness of big data, cloud computing, machine learning, and artificial intelligence. We’ll also cover how these technologies collectively contribute to improved business outcomes. Lastly, we’ll show how you can lay the groundwork for innovation by leveraging these technologies and methods.

In the first part of this Innovation Foundation series, we’re focusing on the foundational data concepts behind these technologies, including:

- The significance of data collection.

- A review of different big data platforms.

- The importance of selecting the right platform to achieve desired outcomes.

- How to maintain these platforms in the context of ML and AI.

The Power of Data Engineering

In the age of digital transformation, data and data engineering have become the lifeblood of organizations across industries. The ability to collect, store, and analyze vast amounts of information has given rise to new platforms. These platforms collate large volumes of data that serve as the foundation for machine learning (ML) and artificial intelligence (AI) technologies.

Data collection serves as the initial step in the big data journey. Organizations gather data from various sources such as customer interactions, social media, sensors, and transaction records. The data collected can be:

- Structured data (organized in a fixed schema, so it can be queried directly).

- Unstructured data (data without a predefined structure, such as audio, video, and images).

This data serves as the raw material for advanced analytics. It also helps businesses build their own innovation foundation by:

- Gaining valuable insights.

- Making informed decisions.

- Identifying patterns and trends that drive innovation and competitive advantage.

The Innovation Foundation: What Are The Common Big Data Platforms?

Several platforms have emerged in recent years, each with its own unique features and capabilities. Here are a few noteworthy platforms:

Apache Hadoop

Hadoop is an open-source framework renowned for its distributed storage and processing capabilities, and it plays a pivotal role in managing and analyzing large datasets.

With its scalability, fault tolerance, and support for structured and unstructured data, Hadoop is highly valuable for machine learning (ML) initiatives.

One compelling use case that demonstrates the symbiotic relationship between Hadoop and artificial intelligence (AI) is AI-powered recommendation systems in e-commerce, where Hadoop serves as the underlying infrastructure.

Hadoop for E-commerce

E-commerce platforms face the challenge of providing personalized recommendations to:

- Enhance user experience.

- Increase engagement.

- Drive sales.

By leveraging Hadoop’s distributed storage and processing capabilities, e-commerce websites can address this challenge efficiently. With Hadoop, they can handle and analyze vast amounts of customer data and product information, which is crucial for building effective recommendation systems. For example, a prominent e-commerce website collects customer data, including purchase history, browsing behavior, and demographic information, and stores it in the Hadoop Distributed File System (HDFS) on a Hadoop cluster for processing. Hadoop’s scalability and fault tolerance keep this data reliably stored and accessible for analysis.

The website applies various algorithms, such as collaborative filtering, content-based filtering, and deep learning models, to:

- Understand customer preferences.

- Identify patterns.

- Provide personalized recommendations.
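Collaborative filtering, one of the algorithms mentioned above, can be illustrated with a minimal item-based sketch in plain Python: products are compared by the cosine similarity of the ratings customers gave them, and unseen products are scored by a similarity-weighted average of the customer’s own ratings. The customers, products, and ratings are hypothetical, and a production system would run this computation at scale on Hadoop or Spark rather than in a single process.

```python
from math import sqrt

# Hypothetical ratings: customer -> {product: rating}
ratings = {
    "alice": {"laptop": 5, "mouse": 4, "desk": 1},
    "bob": {"laptop": 4, "mouse": 5, "monitor": 4},
    "carol": {"desk": 5, "chair": 4, "monitor": 2},
}


def item_vectors(ratings):
    """Invert customer->product ratings into product->customer ratings."""
    items = {}
    for user, prefs in ratings.items():
        for item, score in prefs.items():
            items.setdefault(item, {})[user] = score
    return items


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(a[k] * b[k] for k in set(a) & set(b))
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(user, ratings, top_n=2):
    """Rank products the user has not rated by similarity-weighted ratings."""
    items = item_vectors(ratings)
    seen = ratings[user]
    scores = {}
    for candidate, cand_vec in items.items():
        if candidate in seen:
            continue
        num = den = 0.0
        for item, score in seen.items():
            sim = cosine(items[item], cand_vec)
            num += sim * score
            den += abs(sim)
        if den > 0:
            scores[candidate] = num / den
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


print(recommend("alice", ratings))  # ['monitor', 'chair']
```

Item-based similarity is a common choice because product-to-product similarities change more slowly than individual customer behavior, so they can be precomputed in batch and reused at serving time.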

Using Hadoop MapReduce and Apache Spark, the website extracts and analyzes relevant features, such as product attributes, customer ratings, and purchase histories. This enables it to identify customer segments and product affinities, which in turn drive AI-powered recommendations tailored to each customer. Integrating the front-end application with the data in the Hadoop cluster lets the website deliver these personalized recommendations. This resulted in an estimated 40% net revenue increase, based on recommendation-driven purchases.
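To make the MapReduce step more concrete, here is a single-process Python sketch of the map, shuffle, and reduce phases counting how often pairs of products are bought together, which is one simple way to measure product affinity. The baskets are hypothetical, and the code only mimics the programming model; it does not use the Hadoop or Spark APIs, where the framework would distribute each phase across the cluster.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical purchase baskets, one list of product IDs per order.
orders = [
    ["laptop", "mouse", "bag"],
    ["laptop", "mouse"],
    ["desk", "chair"],
    ["laptop", "bag"],
]


def map_phase(order):
    """Emit ((product_a, product_b), 1) for every pair bought together."""
    for a, b in combinations(sorted(set(order)), 2):
        yield (a, b), 1


def shuffle(mapped):
    """Group emitted values by key, as the framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in mapped:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Sum the counts for one product pair."""
    return key, sum(values)


mapped = (pair for order in orders for pair in map_phase(order))
affinities = dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())
print(affinities[("laptop", "mouse")])  # 2
```

Sorting each basket before pairing ensures that ("laptop", "mouse") and ("mouse", "laptop") are counted under the same key.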

Apache Spark

Apache Spark is an open-source, lightning-fast analytics engine that processes large-scale data in memory. It offers a unified framework for data processing, machine learning, and graph processing, which makes it highly versatile across diverse use cases.

Apache Spark for Fraud Detection

SecureBank uses Apache Spark and AI techniques to detect and prevent fraudulent transactions in real time. It collects extensive transaction data from various sources and stores it in a distributed storage system such as Hadoop. Using Spark’s data processing capabilities, SecureBank then efficiently cleans, transforms, and analyzes the data.

Next, SecureBank trains an AI model using supervised machine learning algorithms from Spark’s MLlib, such as logistic regression and random forest.

What’s it learning? Labeled historical data, which enables the model to identify patterns indicative of fraudulent behavior. Once the model is trained, SecureBank deploys it in a Spark streaming environment to process incoming transaction data in real time. As new transactions occur, Spark’s streaming capabilities allow continuous data processing and real-time scoring with the trained model. Transactions whose risk scores exceed certain thresholds are flagged as potentially fraudulent.
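The scoring step can be sketched in plain Python: a logistic regression model turns transaction features into a fraud probability, and each incoming transaction is flagged when that probability exceeds a risk threshold. The feature names, coefficients, and threshold are hypothetical stand-ins for a trained model; in the setup described above, the model would come from Spark MLlib and the loop would run inside Spark’s streaming engine rather than a Python generator.

```python
from math import exp

# Hypothetical coefficients standing in for a logistic regression model
# trained offline on labeled historical transactions.
WEIGHTS = {"amount_thousands": 0.8, "foreign": 1.5, "night": 1.0}
BIAS = -4.0
RISK_THRESHOLD = 0.5  # hypothetical cut-off; tuned in practice


def fraud_probability(txn):
    """Logistic regression score: sigmoid of the weighted sum of features."""
    z = BIAS + sum(WEIGHTS[name] * txn[name] for name in WEIGHTS)
    return 1.0 / (1.0 + exp(-z))


def score_stream(transactions):
    """Score each transaction as it arrives; yield those above the threshold."""
    for txn in transactions:
        p = fraud_probability(txn)
        if p > RISK_THRESHOLD:
            yield txn["id"], round(p, 3)


incoming = [
    {"id": "t1", "amount_thousands": 0.05, "foreign": 0, "night": 0},
    {"id": "t2", "amount_thousands": 3.0, "foreign": 1, "night": 1},
    {"id": "t3", "amount_thousands": 1.2, "foreign": 1, "night": 0},
]
print(list(score_stream(incoming)))  # only t2 is flagged
```

Because the generator processes one transaction at a time, it mirrors the idea of scoring each event as it arrives instead of waiting for a batch.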

SecureBank regularly updates and fine-tunes the fraud detection model based on feedback and new patterns of fraudulent activities. The AI-powered system improves efficiency by:

- Reducing false positives.

- Enabling faster response times.

- Enhancing the overall security and trustworthiness of SecureBank’s services.

All of this runs on Spark, an open-source tool.
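One way to see how fine-tuning affects false positives is to measure them against analyst feedback at several risk thresholds. The sketch below, using hypothetical scores and labels, computes the false positive rate alongside recall (the share of confirmed fraud that gets flagged). It illustrates the trade-off only and is not SecureBank’s actual evaluation process.

```python
def false_positive_rate(scores, labels, threshold):
    """Share of legitimate transactions (label 0) scored above the threshold."""
    legit = [s for s, y in zip(scores, labels) if y == 0]
    if not legit:
        return 0.0
    return sum(1 for s in legit if s > threshold) / len(legit)


def recall(scores, labels, threshold):
    """Share of fraudulent transactions (label 1) scored above the threshold."""
    fraud = [s for s, y in zip(scores, labels) if y == 1]
    if not fraud:
        return 0.0
    return sum(1 for s in fraud if s > threshold) / len(fraud)


# Hypothetical model scores with feedback labels (1 = confirmed fraud).
scores = [0.10, 0.35, 0.55, 0.62, 0.80, 0.91]
labels = [0, 0, 0, 1, 0, 1]

for threshold in (0.3, 0.5, 0.7):
    print(threshold,
          false_positive_rate(scores, labels, threshold),
          recall(scores, labels, threshold))
```

In this toy data, raising the threshold from 0.3 to 0.7 cuts the false positive rate from 0.75 to 0.25 but also drops recall from 1.0 to 0.5, which is why the model and its threshold need ongoing tuning as new fraud patterns appear.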

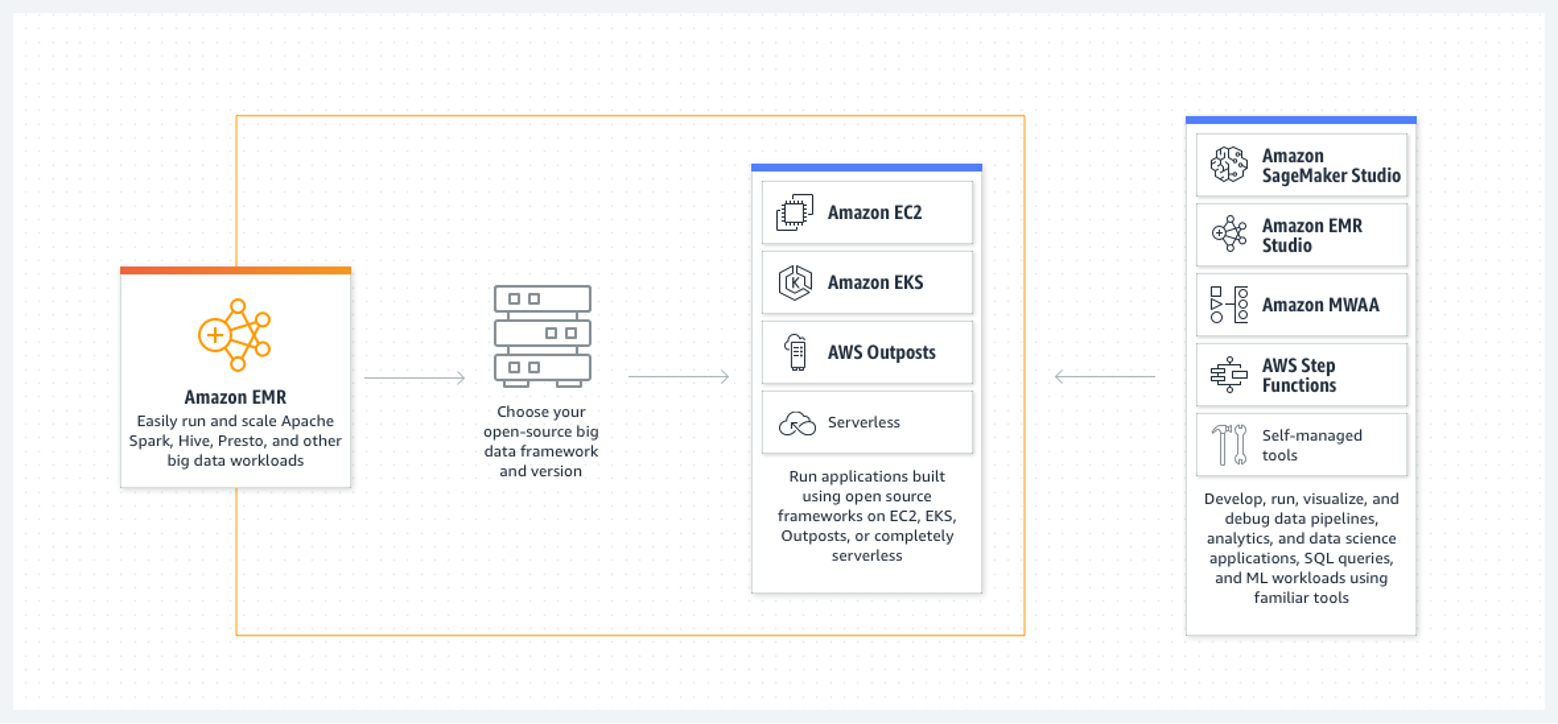

Amazon Web Services (AWS) Elastic MapReduce (EMR)

Amazon EMR is a cloud-based big data platform built on several open-source technologies. It provides scalability, security, and ease of use, enabling organizations to process vast amounts of data without upfront infrastructure investments. And EMR can scale, and we mean SCALE! It supports petabyte-scale data processing, interactive analytics, and machine learning using open-source frameworks such as Apache Spark, Apache Hive, and Presto.

Wealthfront is an automated financial advisory service that offers a combination of financial planning, investment management, and banking-related services. By using AWS solutions, including Amazon EMR, Wealthfront saved 20% of costs and eight hours of data processing across 500 Spark jobs.

“And because Amazon EMR is one of our main expenses, the profitability of the service was important to us,” said Nithin Bandaru, Data Infrastructure Engineer at Wealthfront.

Saving runtime was the main motivator for Wealthfront. Each of the company’s 500 data ingestion pipelines ingests data every day, and across all pipelines the company saved 8 hours of data processing per day. Ultimately, this amounted to a 5% reduction in its overall costs.
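As a quick sanity check on these figures, the arithmetic below spreads the 8 hours saved per day evenly across the 500 pipelines and scales the daily saving to a year. The even split is a simplifying assumption, since actual savings will vary from pipeline to pipeline.

```python
pipelines = 500
hours_saved_per_day = 8

# Assumes the daily saving is spread evenly across all pipelines.
minutes_saved_per_pipeline = hours_saved_per_day * 60 / pipelines
hours_saved_per_year = hours_saved_per_day * 365

print(minutes_saved_per_pipeline)  # 0.96
print(hours_saved_per_year)  # 2920
```

Under a minute per pipeline looks small, but at this scale it adds up to roughly 2,920 hours of processing per year.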

MongoDB

MongoDB is a popular NoSQL database that can play a significant role in supporting AI and ML initiatives. Its flexible, document-based data model allows for easy storage and retrieval of structured and unstructured data, including diverse data types such as text, images, and sensor readings. This facilitates the integration of various data sources into ML workflows.
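To illustrate how a flexible document can feed an ML workflow, the sketch below uses a plain Python dict shaped like a MongoDB document and flattens it into numeric features. All field names and values are hypothetical, including the image reference. The code does not connect to MongoDB; with a driver such as PyMongo, queried documents are returned as Python dicts of this general shape.

```python
# A hypothetical MongoDB-style document mixing structured fields, free text,
# a reference to an image file, and a list of sensor readings.
doc = {
    "_id": "device-42",
    "site": {"region": "north", "floor": 3},
    "maintenance_note": "Fan noise reported; bearing may be wearing out.",
    "photo": {"file_id": "hypothetical-file-id", "content_type": "image/jpeg"},
    "readings": [
        {"sensor": "temp_c", "value": 71.5},
        {"sensor": "temp_c", "value": 74.0},
        {"sensor": "vibration_mm_s", "value": 6.2},
    ],
}


def to_features(doc):
    """Flatten one document into a dict of numeric features for an ML model."""
    readings = doc.get("readings", [])
    temps = [r["value"] for r in readings if r["sensor"] == "temp_c"]
    vib = [r["value"] for r in readings if r["sensor"] == "vibration_mm_s"]
    return {
        "floor": doc["site"]["floor"],
        "mean_temp_c": sum(temps) / len(temps) if temps else 0.0,
        "max_vibration": max(vib) if vib else 0.0,
        "note_word_count": len(doc.get("maintenance_note", "").split()),
        "has_photo": int("photo" in doc),
    }


print(to_features(doc))
```

Because documents in the same collection do not have to share a schema, the `.get(...)` defaults let the same function handle documents that lack readings or notes.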

MongoDB’s built-in features, such as Change Streams and MongoDB Atlas Data Lake, support real-time data ingestion and processing. These capabilities are important for real-time analytics and for AI applications that require continuous data updates and immediate insights.

Other valuable features of MongoDB are its flexible query language, JSON-like documents, and rich APIs, which make it a developer-friendly database for building AI and ML applications. Developers can quickly prototype and iterate on models using MongoDB’s intuitive interface, which enhances productivity and collaboration.

The Innovation Foundation: How To Select the Right Big Data Platform

As you build a data arsenal in hopes of creating the next big AI tool, like ChatGPT (or something else!), you must select the right foundational tools. This is an innovation foundation you can take control of. Selecting the right platform is important for aligning business objectives with the desired outcomes. But should you standardize on one tool or one platform? We believe that using multiple technologies and strategies is the best path to success. Here are some other factors to consider when looking for the right big data platform.

What To Look For In A Big Data Platform

- Scalability – Evaluate the platform’s ability to handle growing data volumes and accommodate future growth without compromising performance.

- Processing Capabilities – Assess the platform’s support for the data processing techniques your organization requires, such as batch processing, real-time streaming, and interactive querying.

- Integration – Consider how well the platform integrates with existing systems and tools in your technology stack, ensuring smooth data workflows and minimizing disruptions.

- Maintenance – Once a platform is implemented, ongoing maintenance is essential to ensure optimal performance and maximize ML and AI outcomes. Does the platform have built-in methods to ease the need for maintenance?

- Data Quality and Governance – Does the platform support established, robust data quality processes to ensure accurate, consistent, and reliable data inputs? Implement data governance policies to maintain data integrity, security, and compliance with regulations.

- Security and Privacy – Can the platform apply your company’s specific security and privacy models to the data it processes? The goal is to implement strong security measures that protect sensitive data from unauthorized access and ensure compliance with data privacy regulations.

The Synergy Between Big Data Platforms, ML, and AI

Big data platforms, ML, and AI are intricately connected, forming a powerful synergy that drives data-driven decision-making and innovation.

Big data platforms provide the infrastructure and tools to collect, store, and process vast amounts of data. Machine learning algorithms leverage this data to identify patterns, make predictions, and automate tasks, and AI systems build on ML models to mimic human intelligence and perform complex tasks. Together, these technologies enable organizations to extract valuable insights, optimize processes, and create intelligent systems that drive efficiency and competitive advantage. This interconnectedness is a transformative force shaping the future of industries globally.

Stay tuned for the next installment of the Innovation Foundation. In part two, we will focus on the available cloud platforms and technologies, including many of the services that support data, analytics, and AI/ML. We will also shed light on commonly used AWS and Azure services. Finally, we will delve into the more advanced data engineering functionality these cloud platforms offer.